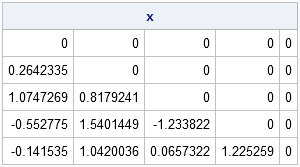

Recall that a system of linear algebraic equations is simply written down in matrix form, which means that elementary row transformations of the matrix don't change the set of solutions of the system the matrix represents. By triangulating the linear equation matrix $AX = B$ to $A'X = B'$, i.e. applying the corresponding transformations to column $B$ as well, you can do the so-called "back substitution".

To explain, we will use the triangular matrix above and rewrite the equation system in a more common form (I've made up column B). In general, a triangulated system looks like this:

$$
\begin{aligned}
a'_{11}x_1 + a'_{12}x_2 + \dots + a'_{1n}x_n &= b'_1 \\
a'_{22}x_2 + \dots + a'_{2n}x_n &= b'_2 \\
&\;\;\vdots \\
a'_{nn}x_n &= b'_n
\end{aligned}
$$

It's clear that first we find $x_n$, then substitute it into the previous equation, find $x_{n-1}$, and so on, moving from the last equation to the first. This row-reduction algorithm is referred to as the Gauss method.

The Gauss method is a classical method for solving systems of linear equations. It is also called Gaussian elimination, as it is a method of successive elimination of variables: with the help of elementary transformations, the system is reduced to row echelon (or triangular) form, from which all the variables are then found in turn, starting from the last.

How can you zero the variable $x_1$ in the second equation? By subtracting the first equation from it, multiplied by the factor $a_{21}/a_{11}$. In a generalized sense, the Gauss method can be represented as follows:

$$a_{ik} \leftarrow a_{ik} - \frac{a_{ij}}{a_{jj}}\,a_{jk}, \qquad j = 1,\dots,n-1,\quad i = j+1,\dots,n,\quad k = j,\dots,n$$

It seems to be a great method, but there is one catch: the division by $a_{jj}$ in the formula. Firstly, if a diagonal element equals zero, the method won't work. Secondly, the rounding error accumulates during the calculation, and the further it goes, the larger it gets. So the result won't be precise.

To reduce the error, modifications of the Gauss method are used. They are based on the fact that the larger the denominator, the lower the error. These modifications are the Gauss method with maximum selection in a column and the Gauss method with maximum selection in the entire matrix. As the names imply, before each step of variable elimination the element with the largest absolute value is searched for in the current column (or in the entire remaining matrix), and a row permutation (plus a column permutation in the entire-matrix variant) is performed so that it changes places with $a_{jj}$.

However, there is a radical modification of the Gauss method: the Bareiss method. How can you get rid of the division? By multiplying the row by $a_{jj}$ before subtracting. Then you subtract the $j$-th row multiplied by $a_{ij}$, without any division:

$$a_{ik} \leftarrow a_{jj}\,a_{ik} - a_{ij}\,a_{jk}$$

It seems good, but there is a problem: the element values grow during the calculations. Bareiss proposed dividing the expression above by $a_{j-1,j-1}$ (taking $a_{00} = 1$) and showed that if the initial matrix elements are integers, the resulting number will also be an integer. By the way, the fact that the Bareiss algorithm reduces a matrix with integer elements to a triangular matrix with integer elements, i.e. without error accumulation, is quite an important feature from the standpoint of machine arithmetic.

The Bareiss algorithm can be represented as:

$$a_{ik} \leftarrow \frac{a_{jj}\,a_{ik} - a_{ij}\,a_{jk}}{a_{j-1,j-1}}, \qquad a_{00} = 1$$
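To make the ideas in this article more concrete, here is a minimal Python sketch of fraction-free (Bareiss-style) triangulation of an augmented matrix $[A \mid B]$, followed by back substitution. It is an illustration under stated assumptions, not a reference implementation: the function names `bareiss_triangulate` and `back_substitute` are made up for this example, the input is assumed to contain only integers, and the row swap on a zero pivot is an addition that the formula itself does not cover.

```python
from fractions import Fraction


def bareiss_triangulate(matrix):
    """Return (triangular copy, sign) for an integer matrix, without fractions.

    Rows may be wider than the matrix is tall, so an augmented matrix
    [A | B] can be passed in. `sign` is +1 or -1 depending on the number
    of row swaps (useful if the determinant is wanted afterwards).
    """
    a = [list(row) for row in matrix]
    n = len(a)
    sign = 1
    prev = 1  # plays the role of a_{0,0} = 1 in the formula
    for j in range(n - 1):
        if a[j][j] == 0:
            # Assumption of this sketch: on a zero pivot, swap in a lower
            # row that has a non-zero entry in column j.
            swap = next((r for r in range(j + 1, n) if a[r][j] != 0), None)
            if swap is None:
                raise ValueError("matrix is singular")
            a[j], a[swap] = a[swap], a[j]
            sign = -sign
        for i in range(j + 1, n):
            for k in range(j + 1, len(a[i])):
                # The division is exact for integer input, so // loses nothing.
                a[i][k] = (a[j][j] * a[i][k] - a[i][j] * a[j][k]) // prev
            a[i][j] = 0
        prev = a[j][j]
    return a, sign


def back_substitute(tri):
    """Solve an upper-triangular augmented system [A' | B'] exactly."""
    n = len(tri)
    x = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):
        if tri[i][i] == 0:
            raise ValueError("zero on the diagonal: no unique solution")
        known = sum(tri[i][k] * x[k] for k in range(i + 1, n))
        x[i] = Fraction(tri[i][n] - known, tri[i][i])
    return x


if __name__ == "__main__":
    # 2x + y - z = 8;  -3x - y + 2z = -11;  -2x + y + 2z = -3
    system = [[2, 1, -1, 8], [-3, -1, 2, -11], [-2, 1, 2, -3]]
    tri, sign = bareiss_triangulate(system)
    print(tri)                                    # [[2, 1, -1, 8], [0, 1, 1, 2], [0, 0, -1, 1]]
    print([int(v) for v in back_substitute(tri)])  # [2, 3, -1]
```

All intermediate values stay integers during triangulation. Fractions appear only in the back substitution step, where the solution itself may not be an integer, so `Fraction` is used there to keep the result exact.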
